OpenStack Swift Integration with Ceph

Overview

In this article we will configure OpenStack Swift to use Ceph as a storage backend. Object or cloud storage is one of the main services provided by OpenStack. Swift is an object storage protocol and implementation. It has been around for quite a while but is fairly limited: it uses rsync to replicate data, scaling rings can be problematic and it only supports object storage, to mention just a few things. OpenStack needs to provide storage for many use cases such as block (Cinder, Glance and Nova), file (Manila) and object (Swift). Ceph is a distributed software-defined storage system that scales with OpenStack and covers all these use cases. As such it is the de facto standard for OpenStack, which is why the OpenStack user survey reports Ceph at 60% of all OpenStack storage.

OpenStack uses Keystone to store service endpoints for all services. Swift has a Keystone endpoint that authenticates OpenStack tenants to Swift, providing object or cloud storage on a per-tenant basis. As mentioned, Ceph provides block, file and object access. For object access Ceph provides S3, Swift and NFS interfaces, all served by the RADOS Gateway (RGW). S3 and Swift users are stored in the RGW. Usually you would want several RGWs in an active/active configuration behind a load balancer. OpenStack tenants can be given automatic access: a tenant's Keystone tenant ID is automatically configured in the RGW when Swift object storage is accessed from that tenant.

Using Ceph with OpenStack for object storage gives tenants access to cloud storage that is integrated with OpenStack through the Swift API and automatically handles authentication of OpenStack tenants. It also has the advantage that external users or tenants (outside of OpenStack), such as application developers, can access object storage directly with the protocol of their choice: S3, Swift or NFS.

Integrating Ceph with OpenStack Series:

- Integrating Ceph with OpenStack Cinder, Glance and Nova

- Integrating Ceph with Swift

- Integrating Ceph with Manila

In order to integrate OpenStack Swift with Ceph you need to first follow below prerequisites:

OpenStack Configuration

In OpenStack we need to delete the existing Swift endpoint and add a new one that points to the RADOS Gateway (RGW).

[OpenStack Controller]

Delete Existing Swift Endpoint

# openstack endpoint list
+----------------------------------+-----------+--------------+----------------+
| ID                               | Region    | Service Name | Service Type   |
+----------------------------------+-----------+--------------+----------------+
| 8a13a475c4904fc3a3b967e3118ea996 | RegionOne | nova         | compute        |
| d9741ef7fe024cb48566530fb0d69981 | RegionOne | aodh         | alarming       |
| 3c841904adf84e678d23400d713fed09 | RegionOne | cinderv3     | volumev3       |
| f685c9fa042448cd962828b99f124c4b | RegionOne | cinder       | volume         |
| 07d77cefad0049b1ae5e1eb6692ebfa1 | RegionOne | swift        | object-store   |
| dd14ff462f4a4bfbb260aa60562ca225 | RegionOne | heat-cfn     | cloudformation |
| dbac05cbfb174b048edfb78356532201 | RegionOne | keystone     | identity       |
| b3f56e1257de4268be9f003936fe32d7 | RegionOne | gnocchi      | metric         |
| a103977deb8f45389f1b6fa8705fdef1 | RegionOne | neutron      | network        |
| baa32d390ab64d48a67b74959ff9951e | RegionOne | manila       | share          |
| 421bc582aefd493ebf2d7377d565954e | RegionOne | cinderv2     | volumev2       |
| e84a94f373dd45efb069f7060d81a166 | RegionOne | glance       | image          |
| 4200e6b1ae7448369a19bbe7295091e8 | RegionOne | manilav2     | sharev2        |
| b4446a635fc246d093c875805e08225f | RegionOne | ceilometer   | metering       |
| 014695a1e8f54830ad267f272552fe78 | RegionOne | heat         | orchestration  |
+----------------------------------+-----------+--------------+----------------+

# openstack endpoint delete 07d77cefad0049b1ae5e1eb6692ebfa1

Note: if the endpoint fails to delete, you can remove it manually from the Keystone database.

# mysql keystone

MariaDB [keystone]> delete from endpoint where id="07d77cefad0049b1ae5e1eb6692ebfa1";

Create Swift Endpoint

If Swift wasn't previously installed then you also need to create a Swift service in Keystone.

# openstack endpoint create --region RegionOne \
  --publicurl "http://192.168.122.81:8080/swift/v1" \
  --adminurl "http://192.168.122.81:8080/swift/v1" \
  --internalurl "http://192.168.122.81:8080/swift/v1" swift

Show the endpoint

# openstack endpoint show d30b81fec7f0406e9c23156745074d6b
+--------------+-------------------------------------+
| Field        | Value                               |
+--------------+-------------------------------------+
| adminurl     | http://192.168.122.81:8080/swift/v1 |
| enabled      | True                                |
| id           | d30b81fec7f0406e9c23156745074d6b    |
| internalurl  | http://192.168.122.81:8080/swift/v1 |
| publicurl    | http://192.168.122.81:8080/swift/v1 |
| region       | RegionOne                           |
| service_id   | bce5f3fdb05e46589d19b915e0e9ac53    |
| service_name | swift                               |
| service_type | object-store                        |
+--------------+-------------------------------------+
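To double-check that all three URLs of the new endpoint point at the RGW, the comparison can be scripted. This is only a minimal sketch: it assumes the endpoint is exported with `openstack endpoint show <id> -f json` and that the JSON uses the same field names as the output above. The helper name `endpoint_mismatches` is hypothetical.

```python
import json

# URL of the RADOS Gateway Swift API used in this article
RGW_URL = "http://192.168.122.81:8080/swift/v1"


def endpoint_mismatches(endpoint_json, expected_url=RGW_URL):
    """Return the URL fields that do not point at expected_url.

    endpoint_json is assumed to be the JSON form of the endpoint,
    with the keys adminurl, internalurl and publicurl.
    """
    endpoint = json.loads(endpoint_json)
    return [
        field
        for field in ("adminurl", "internalurl", "publicurl")
        if endpoint.get(field) != expected_url
    ]


if __name__ == "__main__":
    sample = json.dumps({
        "adminurl": RGW_URL,
        "internalurl": RGW_URL,
        "publicurl": RGW_URL,
        "service_type": "object-store",
    })
    # An empty list means all three URLs point at the RGW
    print(endpoint_mismatches(sample))
```

An empty result means the endpoint is consistent; any field name returned is a URL that still points somewhere else.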

Ceph Configuration

In Ceph we need to configure the RADOS Gateway. The RGW will authenticate OpenStack tenants accessing the object storage from OpenStack using Keystone. As such we need to configure access to Keystone.

[Ceph Monitors]

# vi /etc/ceph/ceph.conf

...

[client.rgw.ceph3]

host = ceph3

keyring = /var/lib/ceph/radosgw/ceph-rgw.ceph3/keyring

rgw socket path = /tmp/radosgw-ceph3.sock

log file = /var/log/ceph/ceph-rgw-ceph3.log

rgw data = /var/lib/ceph/radosgw/ceph-rgw.ceph3

rgw frontends = civetweb port=8080

# Keystone information

rgw_keystone_url = http://192.168.122.80:5000

rgw_keystone_admin_user = admin

rgw_keystone_admin_password = redhat01

rgw_keystone_admin_tenant = admin

rgw_keystone_accepted_roles = admin

rgw_keystone_token_cache_size = 10

rgw_keystone_revocation_interval = 300

rgw_keystone_make_new_tenants = true

rgw_s3_auth_use_keystone = true

#nss_db_path = {path to nss db}

rgw_keystone_verify_ssl = false

...

Note: In this example SSL verification is disabled (rgw_keystone_verify_ssl = false) because Keystone is reached over plain HTTP and no SSL certificates are in use.
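Before restarting the gateways it can help to confirm that the RGW section really contains the Keystone settings. The following is a rough sketch, assuming ceph.conf can be read as a plain INI file by Python's configparser (true for simple files like the one above, not guaranteed for every Ceph configuration). The list of required keys and the function name are my own choice for illustration.

```python
import configparser

# Keystone options the article sets in the RGW section of ceph.conf
REQUIRED_KEYS = (
    "rgw_keystone_url",
    "rgw_keystone_admin_user",
    "rgw_keystone_admin_password",
    "rgw_keystone_admin_tenant",
    "rgw_keystone_accepted_roles",
)

# Shortened sample section with the admin password left out on purpose
SAMPLE = """
[client.rgw.ceph3]
host = ceph3
rgw frontends = civetweb port=8080
rgw_keystone_url = http://192.168.122.80:5000
rgw_keystone_admin_user = admin
rgw_keystone_admin_tenant = admin
rgw_keystone_accepted_roles = admin
"""


def missing_keystone_keys(conf_text, section):
    """Return the required Keystone options absent from the given section."""
    parser = configparser.ConfigParser(
        delimiters=("=",), interpolation=None, strict=False
    )
    parser.read_string(conf_text)
    if not parser.has_section(section):
        return list(REQUIRED_KEYS)
    # Ceph accepts spaces, dashes and underscores in option names,
    # so normalize everything to underscores before comparing
    present = {
        key.replace(" ", "_").replace("-", "_") for key in parser[section]
    }
    return [key for key in REQUIRED_KEYS if key not in present]


if __name__ == "__main__":
    print(missing_keystone_keys(SAMPLE, "client.rgw.ceph3"))
```

On a real node the text would come from reading /etc/ceph/ceph.conf, and the section name would match the local RGW instance.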

Copy /etc/ceph/ceph.conf to all nodes in the Ceph cluster.

[RGW Nodes]

Restart all RGWs: find the service name on each node running an RGW, then restart that service.

# systemctl status |grep radosgw
│ └─64133 grep --color=auto radosgw
├─system-ceph\x2dradosgw.slice
│ └─ceph-radosgw@rgw.ceph1.service
│   └─49414 /usr/bin/radosgw -f --cluster ceph --name client.rgw.ceph1 --setuser ceph --setgroup ceph

# systemctl restart ceph-radosgw@rgw.ceph1.service
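With several RGW nodes, picking the unit name out of the systemctl output by eye gets tedious. Here is a small sketch of how the lookup could be automated. It assumes unit names follow the `ceph-radosgw@<instance>.service` pattern seen above; the function names are hypothetical, and the restart commands are only built, not executed.

```python
import re

# Matches unit names such as ceph-radosgw@rgw.ceph1.service
UNIT_PATTERN = re.compile(r"ceph-radosgw@[\w.\-]+\.service")

# Output of `systemctl status | grep radosgw` as shown in this article
SAMPLE = r"""
│ └─64133 grep --color=auto radosgw
├─system-ceph\x2dradosgw.slice
│ └─ceph-radosgw@rgw.ceph1.service
│   └─49414 /usr/bin/radosgw -f --cluster ceph --name client.rgw.ceph1
"""


def radosgw_units(systemctl_output):
    """Return the distinct ceph-radosgw unit names found in the output."""
    units = []
    for match in UNIT_PATTERN.findall(systemctl_output):
        if match not in units:
            units.append(match)
    return units


def restart_commands(units):
    """Build one `systemctl restart` argument list per unit (not run here)."""
    return [["systemctl", "restart", unit] for unit in units]


if __name__ == "__main__":
    for command in restart_commands(radosgw_units(SAMPLE)):
        print(" ".join(command))
```

Each argument list could then be handed to `subprocess.run(command, check=True)` on the node in question.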

Validating Swift

Now that everything is configured, we can test access to the object store through a tenant in OpenStack. For this I have created a tenant named "Test" and a user named "keith" that has access to the "Test" project.

[OpenStack Controller]

Show User Info

# openstack user show keith
+------------+----------------------------------+
| Field      | Value                            |
+------------+----------------------------------+
| enabled    | True                             |
| id         | ff29eea35c234ae08fbd0c124ae1cdca |
| name       | keith                            |
| project_id | bba8cef1e00744e48ef10d3b68ec8002 |
| username   | keith                            |
+------------+----------------------------------+

Note: pay attention to the project or tenant id.

Upload File to Swift

Authenticate as user keith.

# source keystonerc_keith

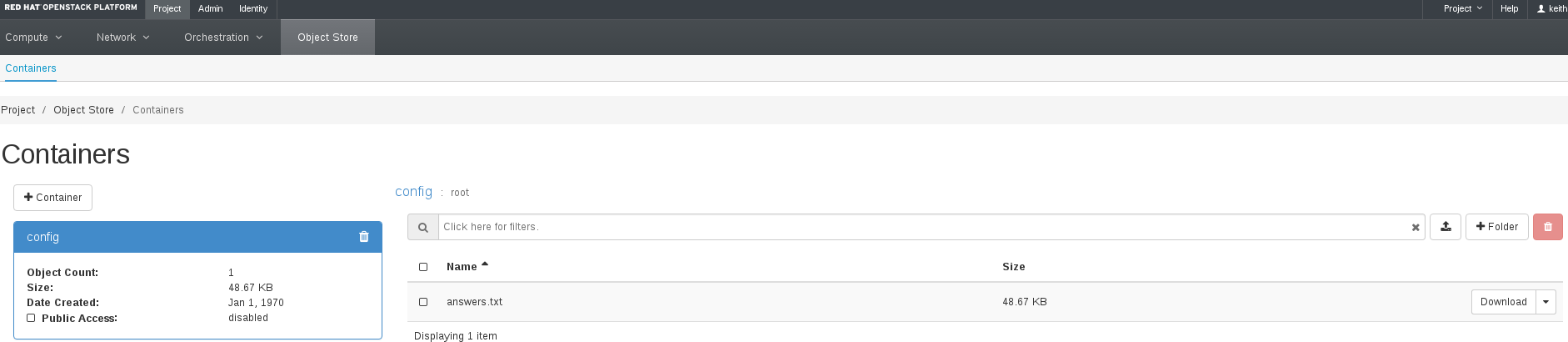

Upload the file /root/answers.txt to a container called config. If the container doesn't exist it is created.

# swift upload config /root/answers.txt
root/answers.txt

List contents of container.

# swift list config
root/answers.txt
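The swift client talks plain HTTP to the endpoint registered in Keystone, so the same listing can be reproduced with a few lines of code. This is a minimal sketch, assuming a valid Keystone token is already at hand (for example from `openstack token issue`) and that the RGW endpoint configured earlier serves as the storage URL. The function names are hypothetical.

```python
import urllib.request
from urllib.parse import quote

# Swift endpoint served by the RGW in this article
STORAGE_URL = "http://192.168.122.81:8080/swift/v1"


def container_listing_request(container, token, storage_url=STORAGE_URL):
    """Build a GET request that lists the objects in a container."""
    url = f"{storage_url.rstrip('/')}/{quote(container)}"
    return urllib.request.Request(url, headers={"X-Auth-Token": token})


def list_container(container, token, storage_url=STORAGE_URL):
    """Send the request and return the object names, one per reply line."""
    request = container_listing_request(container, token, storage_url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8").splitlines()


if __name__ == "__main__":
    # Only builds the request; list_container() needs a reachable RGW
    request = container_listing_request("config", "<keystone token>")
    print(request.get_method(), request.full_url)
```

Calling `list_container("config", token)` against the setup in this article should return the object uploaded above, provided the token belongs to a user with an accepted role.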

Show contents of container in Horizon.

Show OpenStack Tenant in RADOS Gateway

As mentioned, the OpenStack tenant was automatically created as a user in the RGW. Its user ID is the Keystone project ID shown earlier.

[RGW Nodes]

# radosgw-admin metadata list user
[
    "b22c788f00a14d718fd6b6986987b7c1",
    "bba8cef1e00744e48ef10d3b68ec8002",
    "29f6ba825bf5418395919c85874db4a5",
    "s3user"
]

List Info for tenant user.

# radosgw-admin user info --uid="bba8cef1e00744e48ef10d3b68ec8002"

{

"user_id": "bba8cef1e00744e48ef10d3b68ec8002",

"display_name": "Test",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"auid": 0,

"subusers": [],

"keys": [],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"max_size_kb": -1,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"max_size_kb": -1,

"max_objects": -1

},

"temp_url_keys": []

}
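Since radosgw-admin prints JSON, the link between the Keystone project and the RGW user can also be checked in a script. The sketch below assumes the output of the two commands above has been captured as text. Both function names are hypothetical, and only fields visible in the output above are used.

```python
import json


def tenant_user_exists(metadata_list_json, project_id):
    """True if project_id is in `radosgw-admin metadata list user` output."""
    return project_id in json.loads(metadata_list_json)


def user_summary(user_info_json):
    """Pick a few fields out of a `radosgw-admin user info` document."""
    info = json.loads(user_info_json)
    return {
        "user_id": info["user_id"],
        "display_name": info["display_name"],
        "user_quota_enabled": info["user_quota"]["enabled"],
        "bucket_quota_enabled": info["bucket_quota"]["enabled"],
    }


if __name__ == "__main__":
    project_id = "bba8cef1e00744e48ef10d3b68ec8002"
    users = json.dumps(["b22c788f00a14d718fd6b6986987b7c1", project_id, "s3user"])
    info = json.dumps({
        "user_id": project_id,
        "display_name": "Test",
        "bucket_quota": {"enabled": False, "max_size_kb": -1, "max_objects": -1},
        "user_quota": {"enabled": False, "max_size_kb": -1, "max_objects": -1},
    })
    print(tenant_user_exists(users, project_id))
    print(user_summary(info))
```

The project ID passed in is the one reported by `openstack user show`, which is why it is worth noting down during validation.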

Summary

In this article we learned how to configure OpenStack Swift to use Ceph as a storage backend for tenant cloud storage. We briefly discussed the value of using Ceph instead of Swift for OpenStack object storage. Finally we saw how user management works between Keystone and the RADOS Gateway in OpenStack. I hope you enjoyed this article and found it of use. Comments are more than welcome so don't be shy.

Happy Swifting!

(c) 2017 Keith Tenzer