OpenStack: Integrating Ceph as Storage Backend

Overview

In this article we will discuss why Ceph is a perfect fit for OpenStack. We will then see how to integrate Ceph with three prominent OpenStack use cases: Cinder (block storage), Glance (images) and Nova (VM virtual disks).

Integrating Ceph with OpenStack Series:

- Integrating Ceph with OpenStack Cinder, Glance and Nova

- Integrating Ceph with Swift

- Integrating Ceph with Manila

Ceph provides unified scale-out storage on commodity x86 hardware that is self-healing and intelligently anticipates failures. It has become the de facto standard for software-defined storage. Because Ceph is an open source project, many vendors are able to offer Ceph-based software-defined storage systems. Ceph is not limited to companies like Red Hat, SUSE, Mirantis and Ubuntu; integrated solutions from SanDisk, Fujitsu, HP, Dell, Samsung and many more exist today. There are even large-scale community-built environments (CERN comes to mind) that provide storage services for tens of thousands of VMs.

Ceph is by no means limited to OpenStack, but this is where Ceph started gaining traction. According to the latest OpenStack user survey, Ceph is by a large margin the clear leader for OpenStack storage. Page 42 of the April 2016 OpenStack User Survey shows Ceph at 57% of OpenStack storage, followed by LVM (local storage) at 28% and NetApp at 9%. If we set LVM aside, Ceph leads every other storage vendor by at least 48 percentage points, which is incredible. Why is that?

There are several reasons but I will give you my top three:

- Ceph is a scale-out unified storage platform. OpenStack needs two things from storage: the ability to scale with OpenStack itself, and the ability to do so regardless of whether the storage is block (Cinder), file (Manila) or object (Swift). Traditional storage vendors need to provide two or three different storage systems to achieve this. Those systems don't scale the same way and in most cases only scale up, in never-ending migration cycles. Their management capabilities are never truly integrated across the broad spectrum of storage use cases.

- Ceph is cost-effective. Ceph uses Linux as its operating system instead of something proprietary. You can choose not only whom you purchase Ceph from, but also where you get your hardware, whether from the same vendor or a different one. You can purchase commodity hardware, or even buy an integrated Ceph plus hardware solution from a single vendor. Hyper-converged options for Ceph (running Ceph services on compute nodes) are also emerging.

- Ceph is an open source project, just like OpenStack. This allows for much tighter integration and cross-project development. Proprietary vendors are always playing catch-up since they have secrets to protect, and their influence in open source communities is usually limited, especially in the OpenStack context.

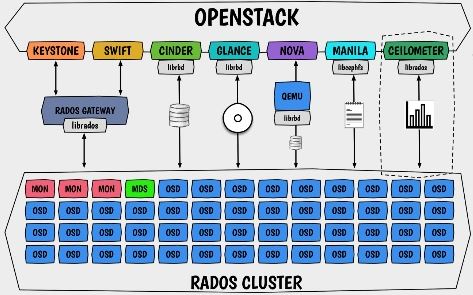

Below is an architecture Diagram that shows all the different OpenStack components that need storage. It shows how they integrate with Ceph and how Ceph provides a unified storage system that scales to fill all these use cases.

source: Red Hat Summit 2016

If you are interested in more topics relating to Ceph and OpenStack, I recommend following: http://ceph.com/category/ceph-and-openstack/

OK, enough talking about why Ceph and OpenStack are so great. Let's get our hands dirty and see how to hook it up!

If you don't have a Ceph environment you can follow this article on how to set one up quickly.

Glance Integration

Glance is the image service within OpenStack. By default, images are stored locally on controllers and then copied to compute hosts when requested. The compute hosts cache the images, but the images need to be copied again every time an image is updated.

Ceph provides a backend for Glance that allows images to be stored in Ceph instead of locally on controller and compute nodes. This greatly reduces network traffic for pulling images and increases performance, since Ceph can clone images instead of copying them. In addition, it makes migrating between OpenStack deployments, or concepts like multi-site OpenStack, much simpler.

Install the Ceph client used by Glance.

[root@osp9 ~]# yum install -y python-rbd

Create Ceph user and set home directory to /etc/ceph.

[root@osp9 ~]# mkdir /etc/ceph
[root@osp9 ~]# useradd ceph
[root@osp9 ~]# passwd ceph

Add ceph user to sudoers.

cat << EOF >/etc/sudoers.d/ceph
ceph ALL = (root) NOPASSWD:ALL
Defaults:ceph !requiretty
EOF

On Ceph admin node.

Create Ceph RBD Pool for Glance images.

[ceph@ceph1 ~]$ sudo ceph osd pool create images 128

Create keyring that will allow Glance access to pool.

[ceph@ceph1 ~]$ sudo ceph auth get-or-create client.images mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images' -o /etc/ceph/ceph.client.images.keyring

Copy the keyring to /etc/ceph on OpenStack controller.

[ceph@ceph1 ~]$ scp /etc/ceph/ceph.client.images.keyring root@osp9.lab:/etc/ceph

Copy /etc/ceph/ceph.conf configuration to OpenStack controller.

[ceph@ceph1 ~]$ scp /etc/ceph/ceph.conf root@osp9.lab:/etc/ceph

Set permissions so Glance can access Ceph keyring.

[root@osp9 ~(keystone_admin)]# chgrp glance /etc/ceph/ceph.client.images.keyring

[root@osp9 ~(keystone_admin)]# chmod 0640 /etc/ceph/ceph.client.images.keyring

Add keyring file to Ceph configuration.

[root@osp9 ~(keystone_admin)]# vi /etc/ceph/ceph.conf
[client.images]
keyring = /etc/ceph/ceph.client.images.keyring
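The same section can also be appended from a script instead of an editor, which helps when the step is repeated for several clients. The following is only a minimal sketch: the helper name `add_client_keyring` is hypothetical, and it assumes the keyring follows the `/etc/ceph/ceph.client.<name>.keyring` naming used in this article.

```shell
# Hypothetical helper: append a [client.<name>] section that points at the
# client's keyring, unless a section with that name already exists.
add_client_keyring() {
    conf=$1      # path to ceph.conf
    client=$2    # client name without the "client." prefix, e.g. images
    if grep -q "^\[client\.${client}]" "$conf" 2>/dev/null; then
        return 0  # section already present, leave the file untouched
    fi
    printf '\n[client.%s]\nkeyring = /etc/ceph/ceph.client.%s.keyring\n' \
        "$client" "$client" >> "$conf"
}
```

For example, `add_client_keyring /etc/ceph/ceph.conf images` would add the section shown above, and running it a second time changes nothing.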

Create backup of original Glance configuration.

[root@osp9 ~(keystone_admin)]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.orig

Update Glance configuration.

[root@osp9 ~]# vi /etc/glance/glance-api.conf
[glance_store]
stores = glance.store.rbd.Store
default_store = rbd
rbd_store_pool = images
rbd_store_user = images
rbd_store_ceph_conf = /etc/ceph/ceph.conf
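Before restarting the service it can be worth confirming that the values landed in the intended section, since a key placed under the wrong header is silently ignored. Below is a small sketch of such a check; `ini_get` is a hypothetical helper written for this article, not a Glance or Ceph tool, and it only handles simple `key = value` lines.

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI file.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        # A header line switches the current section on or off.
        /^\[/ { in_section = ($0 == ("[" section "]")); next }
        in_section {
            pos = index($0, "=")          # split on the first "="
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)  # trim whitespace around key
            gsub(/^[ \t]+|[ \t]+$/, "", v)  # trim whitespace around value
            if (k == key) { print v; exit }
        }
    ' "$1"
}
```

With the configuration above, `ini_get /etc/glance/glance-api.conf glance_store default_store` should print `rbd`.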

Restart Glance.

[root@osp9 ~(keystone_admin)]# systemctl restart openstack-glance-api

Download the Cirros image.

[root@osp9 ~(keystone_admin)]# wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

Convert the image from QCOW2 to RAW. Using the RAW format is always recommended with Ceph.

[root@osp9 ~(keystone_admin)]# qemu-img convert -f qcow2 -O raw cirros-0.3.4-x86_64-disk.img cirros-0.3.4-x86_64-disk.raw
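It is easy to upload the wrong file at this point, so a quick sanity check on the converted image can help. `qemu-img info` reports the format properly; the sketch below is a lighter alternative that only looks at the first four bytes, relying on the fact that QCOW files start with the magic bytes `QFI` followed by 0xfb. The function name `image_format_guess` is made up for this example.

```shell
# Print "qcow2" if FILE starts with the QCOW magic bytes ("QFI" + 0xfb),
# otherwise "not-qcow2". A raw image has no header that identifies it,
# so this can only rule qcow2 out, not prove that a file is raw.
image_format_guess() {
    magic=$(od -An -tx1 -N4 "$1" | tr -d ' \n')
    if [ "$magic" = "514649fb" ]; then
        echo qcow2
    else
        echo not-qcow2
    fi
}
```

Running `image_format_guess cirros-0.3.4-x86_64-disk.raw` before uploading should print `not-qcow2`.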

Add image to Glance.

[root@osp9 ~(keystone_admin)]# glance image-create --name "Cirros 0.3.4" --disk-format raw --container-format bare --visibility public --file cirros-0.3.4-x86_64-disk.raw

+------------------+--------------------------------------+
| Property         | Value                                |
+------------------+--------------------------------------+
| checksum         | ee1eca47dc88f4879d8a229cc70a07c6     |
| container_format | bare                                 |
| created_at       | 2016-09-07T12:29:23Z                 |
| disk_format      | qcow2                                |
| id               | a55e9417-67af-43c5-a342-85d2c4c483f7 |
| min_disk         | 0                                    |
| min_ram          | 0                                    |
| name             | Cirros 0.3.4                         |
| owner            | dd6a4ed994d04255a451da66b68a8057     |
| protected        | False                                |
| size             | 13287936                             |
| status           | active                               |
| tags             | []                                   |
| updated_at       | 2016-09-07T12:29:27Z                 |
| virtual_size     | None                                 |
| visibility       | public                               |
+------------------+--------------------------------------+

Check that glance image exists in Ceph.

[ceph@ceph1 ceph-config]$ sudo rbd ls images
a55e9417-67af-43c5-a342-85d2c4c483f7

[ceph@ceph1 ceph-config]$ sudo rbd info images/a55e9417-67af-43c5-a342-85d2c4c483f7
rbd image 'a55e9417-67af-43c5-a342-85d2c4c483f7':
    size 12976 kB in 2 objects
    order 23 (8192 kB objects)
    block_name_prefix: rbd_data.183e54fd29b46
    format: 2
    features: layering, striping
    flags:
    stripe unit: 8192 kB
    stripe count: 1

Cinder Integration

Cinder is the block storage service in OpenStack. Cinder provides an abstraction around block storage and allows vendors to integrate by providing a driver. In Ceph, each storage pool can be mapped to a different Cinder backend. This allows for creating storage services such as gold, silver or bronze. You can decide for example that gold should be fast SSD disks that are replicated three times, while silver only should be replicated two times and bronze should use slower disks with erasure coding.
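As a rough illustration of the gold, silver and bronze idea, each tier becomes its own backend section in cinder.conf that points at a different Ceph pool. In the sketch below the helper `cinder_rbd_backend` and the pool names are hypothetical. The option names are the same ones used in the backend configuration in this article, plus `volume_backend_name`, which Cinder uses to match a volume type to a backend. Each generated section would also have to be listed in `enabled_backends`, which is an assumption about the rest of your cinder.conf.

```shell
# Hypothetical helper: print a cinder.conf backend section for one tier.
# Arguments: tier name, Ceph pool, Ceph client name, libvirt secret UUID.
cinder_rbd_backend() {
    cat <<EOF
[$1]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = $1
rbd_pool = $2
rbd_user = $3
rbd_secret_uuid = $4
rbd_ceph_conf = /etc/ceph/ceph.conf
EOF
}
```

For instance, `cinder_rbd_backend gold volumes-gold volumes "$(uuidgen)"` prints a `[gold]` section for a pool called `volumes-gold`; the replication or erasure coding behind that pool is decided on the Ceph side when the pool is created.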

Create Ceph pool for cinder volumes.

[ceph@ceph1 ~]$ sudo ceph osd pool create volumes 128

Create keyring to grant cinder access.

[ceph@ceph1 ~]$ sudo ceph auth get-or-create client.volumes mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rx pool=images' -o /etc/ceph/ceph.client.volumes.keyring
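The OSD capability string follows the same pattern for every client in this article: class-read on `rbd_children`, read/write on the client's own pool, and read-only access to any pool it clones from. A small sketch that builds the string, assuming exactly that pattern (the function name `rbd_osd_caps` is made up for illustration):

```shell
# Hypothetical helper: build the OSD capability string for an RBD client.
# First argument: pool with read/write access.
# Remaining arguments: pools that only need read access (for cloning).
rbd_osd_caps() {
    caps="allow class-read object_prefix rbd_children, allow rwx pool=$1"
    shift
    for pool in "$@"; do
        caps="$caps, allow rx pool=$pool"
    done
    printf '%s\n' "$caps"
}
```

The result can be passed straight to the command above, for example `osd "$(rbd_osd_caps volumes images)"`, which avoids typos in a long quoted string.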

Copy keyring to OpenStack controllers.

[ceph@ceph1 ~]$ scp /etc/ceph/ceph.client.volumes.keyring root@osp9.lab:/etc/ceph

Create file that contains just the authentication key on OpenStack controllers.

[ceph@ceph1 ~]$ sudo ceph auth get-key client.volumes | ssh root@osp9.lab tee /etc/ceph/client.volumes.key

Set permissions on keyring file so it can be accessed by Cinder.

[root@osp9 ~(keystone_admin)]# chgrp cinder /etc/ceph/ceph.client.volumes.keyring
[root@osp9 ~(keystone_admin)]# chmod 0640 /etc/ceph/ceph.client.volumes.keyring

Add keyring to Ceph configuration file on OpenStack controllers.

[root@osp9 ~(keystone_admin)]# vi /etc/ceph/ceph.conf
[client.volumes]
keyring = /etc/ceph/ceph.client.volumes.keyring

Give KVM Hypervisor access to Ceph.

[root@osp9 ~(keystone_admin)]# uuidgen |tee /etc/ceph/cinder.uuid.txt

Create a secret in virsh so KVM can access Ceph pool for cinder volumes.

[root@osp9 ~(keystone_admin)]# vi /etc/ceph/cinder.xml
<secret ephemeral="no" private="no">
  <uuid>ce6d1549-4d63-476b-afb6-88f0b196414f</uuid>
  <usage type="ceph">
    <name>client.volumes secret</name>
  </usage>
</secret>

[root@osp9 ceph]# virsh secret-define --file /etc/ceph/cinder.xml

[root@osp9 ~(keystone_admin)]# virsh secret-set-value --secret ce6d1549-4d63-476b-afb6-88f0b196414f --base64 $(cat /etc/ceph/client.volumes.key)
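Since the UUID has to match in three places (the secret XML, the `virsh secret-set-value` call and later the service configuration), generating the XML from the saved UUID file avoids copy and paste mistakes. This is a sketch only; `ceph_secret_xml` is a hypothetical helper that reproduces the XML layout shown above.

```shell
# Hypothetical helper: print a libvirt secret definition for a Ceph client.
# Arguments: secret UUID, Ceph client name (for example client.volumes).
ceph_secret_xml() {
    cat <<EOF
<secret ephemeral="no" private="no">
  <uuid>$1</uuid>
  <usage type="ceph">
    <name>$2 secret</name>
  </usage>
</secret>
EOF
}
```

For the Cinder secret this could be used as `ceph_secret_xml "$(cat /etc/ceph/cinder.uuid.txt)" client.volumes > /etc/ceph/cinder.xml`, followed by the same `virsh secret-define` command as above.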

Add Ceph backend for Cinder.

[root@osp9 ~(keystone_admin)]# vi /etc/cinder/cinder.conf
[rbd]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
rbd_pool = volumes
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = -1
glance_api_version = 2
rbd_user = volumes
rbd_secret_uuid = ce6d1549-4d63-476b-afb6-88f0b196414f

Restart Cinder service on all controllers.

[root@osp9 ~(keystone_admin)]# openstack-service restart cinder

Create a cinder volume.

[root@osp9 ~(keystone_admin)]# cinder create --display-name="test" 1

+--------------------------------+--------------------------------------+
| Property                       | Value                                |
+--------------------------------+--------------------------------------+
| attachments                    | []                                   |
| availability_zone              | nova                                 |
| bootable                       | false                                |
| consistencygroup_id            | None                                 |
| created_at                     | 2016-09-08T10:58:17.000000           |
| description                    | None                                 |
| encrypted                      | False                                |
| id                             | d251bb74-5c5c-4c40-a15b-2a4a17bbed8b |
| metadata                       | {}                                   |
| migration_status               | None                                 |
| multiattach                    | False                                |
| name                           | test                                 |
| os-vol-host-attr:host          | None                                 |
| os-vol-mig-status-attr:migstat | None                                 |
| os-vol-mig-status-attr:name_id | None                                 |
| os-vol-tenant-attr:tenant_id   | dd6a4ed994d04255a451da66b68a8057     |
| replication_status             | disabled                             |
| size                           | 1                                    |
| snapshot_id                    | None                                 |
| source_volid                   | None                                 |
| status                         | creating                             |
| updated_at                     | None                                 |
| user_id                        | 783d6e51e611400c80458de5d735897e     |
| volume_type                    | None                                 |
+--------------------------------+--------------------------------------+

List the new Cinder volume.

[root@osp9 ~(keystone_admin)]# cinder list

+--------------------------------------+-----------+------+------+-------------+----------+-------------+
| ID                                   | Status    | Name | Size | Volume Type | Bootable | Attached to |
+--------------------------------------+-----------+------+------+-------------+----------+-------------+
| d251bb74-5c5c-4c40-a15b-2a4a17bbed8b | available | test | 1    | -           | false    |             |
+--------------------------------------+-----------+------+------+-------------+----------+-------------+

List cinder volume in ceph.

[ceph@ceph1 ~]$ sudo rbd ls volumes
volume-d251bb74-5c5c-4c40-a15b-2a4a17bbed8b

[ceph@ceph1 ~]$ sudo rbd info volumes/volume-d251bb74-5c5c-4c40-a15b-2a4a17bbed8b
rbd image 'volume-d251bb74-5c5c-4c40-a15b-2a4a17bbed8b':
    size 1024 MB in 256 objects
    order 22 (4096 kB objects)
    block_name_prefix: rbd_data.2033b50c26d41
    format: 2
    features: layering, striping
    flags:
    stripe unit: 4096 kB
    stripe count: 1
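The numbers in the `rbd info` output can be checked by hand. The `order` is the base-2 logarithm of the object size, so order 22 means 4 MiB objects, and a 1 GiB volume needs 1024 / 4 = 256 of them. The same calculation as a short sketch (the function name is only illustrative):

```shell
# Number of RADOS objects needed for an RBD image of SIZE bytes when each
# object holds 2^ORDER bytes. The division is rounded up.
rbd_object_count() {
    size=$1
    order=$2
    echo $(( (size + (1 << order) - 1) >> order ))
}

rbd_object_count 1073741824 22   # 1 GiB Cinder volume, order 22 -> 256
rbd_object_count 13287936 23     # Glance image from earlier, order 23 -> 2
```

This also explains why `rbd_store_chunk_size = 4` in cinder.conf shows up as 4096 kB objects here.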

Integrating Ceph with Nova Compute

Nova is the compute service within OpenStack. By default, Nova stores the virtual disk images associated with running VMs locally on the hypervisor under /var/lib/nova/instances. There are a few drawbacks to using local storage on compute nodes for virtual disk images:

- Images are stored under the root filesystem. Large images can cause the filesystem to fill up, thus crashing compute nodes.

- A disk crash on a compute node could cause loss of the virtual disk, making VM recovery impossible.

Ceph is one of the storage backends that can integrate directly with Nova. In this section we will see how to configure that.

Create Ceph pool for Nova VM disks.

[ceph@ceph1 ~]$ sudo ceph osd pool create vms 128

Create authentication keyring for Nova.

[ceph@ceph1 ~]$ sudo ceph auth get-or-create client.nova mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=vms, allow rx pool=images' -o /etc/ceph/ceph.client.nova.keyring

Copy keyring to OpenStack controllers.

[ceph@ceph1 ~]$ scp /etc/ceph/ceph.client.nova.keyring root@osp9.lab:/etc/ceph

Create key file on OpenStack controllers.

[ceph@ceph1 ~]$ sudo ceph auth get-key client.nova | ssh root@osp9.lab tee /etc/ceph/client.nova.key

Set permissions on keyring file so it can be accessed by Nova service.

[root@osp9 ~(keystone_admin)]# chgrp nova /etc/ceph/ceph.client.nova.keyring
[root@osp9 ~(keystone_admin)]# chmod 0640 /etc/ceph/ceph.client.nova.keyring

Ensure the required rpm packages are installed.

[root@osp9 ~(keystone_admin)]# yum list installed python-rbd ceph-common
Loaded plugins: product-id, search-disabled-repos, subscription-manager
Installed Packages
ceph-common.x86_64    1:0.94.5-15.el7cp    @rhel-7-server-rhceph-1.3-mon-rpms
python-rbd.x86_64     1:0.94.5-15.el7cp    @rhel-7-server-rhceph-1.3-mon-rpms

Update Ceph configuration.

[root@osp9 ~(keystone_admin)]# vi /etc/ceph/ceph.conf
[client.nova]
keyring = /etc/ceph/ceph.client.nova.keyring

Give KVM access to Ceph.

[root@osp9 ~(keystone_admin)]# uuidgen |tee /etc/ceph/nova.uuid.txt

Create a secret in virsh so KVM can access the Ceph pool for Nova VM disks.

[root@osp9 ~(keystone_admin)]# vi /etc/ceph/nova.xml
<secret ephemeral="no" private="no">
  <uuid>c89c0a90-9648-49eb-b443-c97adb538f23</uuid>
  <usage type="ceph">
    <name>client.nova secret</name>
  </usage>
</secret>

[root@osp9 ~(keystone_admin)]# virsh secret-define --file /etc/ceph/nova.xml

[root@osp9 ~(keystone_admin)]# virsh secret-set-value --secret c89c0a90-9648-49eb-b443-c97adb538f23 --base64 $(cat /etc/ceph/client.nova.key)

Make backup of Nova configuration.

[root@osp9 ~(keystone_admin)]# cp /etc/nova/nova.conf /etc/nova/nova.conf.orig

Update Nova configuration to use Ceph backend.

[root@osp9 ~(keystone_admin)]# vi /etc/nova/nova.conf
[DEFAULT]
force_raw_images = True

[libvirt]
disk_cachemodes = "network=writeback"
images_type = rbd
images_rbd_pool = vms
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = nova
rbd_secret_uuid = c89c0a90-9648-49eb-b443-c97adb538f23

Restart Nova services.

[root@osp9 ~(keystone_admin)]# systemctl restart openstack-nova-compute

List Neutron networks.

[root@osp9 ~(keystone_admin)]# neutron net-list
+--------------------------------------+---------+-----------------------------------------------------+
| id                                   | name    | subnets                                             |
+--------------------------------------+---------+-----------------------------------------------------+
| 4683d03d-30fc-4dd1-9b5f-eccd87340e70 | private | ef909061-34ee-4b67-80a9-829ea0a862d0 10.10.1.0/24   |
| 8d35a938-5e4f-46a2-8948-b8c4d752e81e | public  | bb2b65e7-ab41-4792-8591-91507784b8d8 192.168.0.0/24 |
+--------------------------------------+---------+-----------------------------------------------------+

Start ephemeral VM instance using Cirros image that was added in the steps for Glance.

[root@osp9 ~(keystone_admin)]# nova boot --flavor m1.small --nic net-id=4683d03d-30fc-4dd1-9b5f-eccd87340e70 --image='Cirros 0.3.4' cephvm

+--------------------------------------+-----------------------------------------------------+
| Property                             | Value                                               |
+--------------------------------------+-----------------------------------------------------+
| OS-DCF:diskConfig                    | MANUAL                                              |
| OS-EXT-AZ:availability_zone          |                                                     |
| OS-EXT-SRV-ATTR:host                 | -                                                   |
| OS-EXT-SRV-ATTR:hypervisor_hostname  | -                                                   |
| OS-EXT-SRV-ATTR:instance_name        | instance-00000001                                   |
| OS-EXT-STS:power_state               | 0                                                   |
| OS-EXT-STS:task_state                | scheduling                                          |
| OS-EXT-STS:vm_state                  | building                                            |
| OS-SRV-USG:launched_at               | -                                                   |
| OS-SRV-USG:terminated_at             | -                                                   |
| accessIPv4                           |                                                     |
| accessIPv6                           |                                                     |
| adminPass                            | wzKrvK3miVJ3                                        |
| config_drive                         |                                                     |
| created                              | 2016-09-08T11:41:29Z                                |
| flavor                               | m1.small (2)                                        |
| hostId                               |                                                     |
| id                                   | 85c66004-e8c6-497e-b5d3-b949a1666c90                |
| image                                | Cirros 0.3.4 (a55e9417-67af-43c5-a342-85d2c4c483f7) |
| key_name                             | -                                                   |
| metadata                             | {}                                                  |
| name                                 | cephvm                                              |
| os-extended-volumes:volumes_attached | []                                                  |
| progress                             | 0                                                   |
| security_groups                      | default                                             |
| status                               | BUILD                                               |
| tenant_id                            | dd6a4ed994d04255a451da66b68a8057                    |
| updated                              | 2016-09-08T11:41:33Z                                |
| user_id                              | 783d6e51e611400c80458de5d735897e                    |
+--------------------------------------+-----------------------------------------------------+

Wait until the VM is active.

[root@osp9 ceph(keystone_admin)]# nova list
+--------------------------------------+--------+--------+------------+-------------+---------------------+
| ID                                   | Name   | Status | Task State | Power State | Networks            |
+--------------------------------------+--------+--------+------------+-------------+---------------------+
| 8ca3e74e-cd52-42a6-acec-13a5b8bda53c | cephvm | ACTIVE | -          | Running     | private=10.10.1.106 |
+--------------------------------------+--------+--------+------------+-------------+---------------------+
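When this step is scripted, the waiting can be done with a small polling loop instead of re-running the list command by hand. The helper below is a generic sketch, not part of any OpenStack client: it runs whatever status command it is given until that command prints the expected value or the attempts run out.

```shell
# Hypothetical helper: poll a status command until it prints EXPECTED.
# Usage: wait_for_status EXPECTED ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 once the command output equals EXPECTED, 1 if it never does.
wait_for_status() {
    expected=$1
    attempts=$2
    delay=$3
    shift 3
    i=0
    while [ "$i" -lt "$attempts" ]; do
        status=$("$@")
        if [ "$status" = "$expected" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

Assuming the unified `openstack` client is installed alongside the `nova` CLI used in this article, a call might look like `wait_for_status ACTIVE 30 5 openstack server show cephvm -f value -c status`.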

List images in Ceph vms pool. We should now see the image is stored in Ceph.

[ceph@ceph1 ~]$ sudo rbd -p vms ls
8ca3e74e-cd52-42a6-acec-13a5b8bda53c_disk

Troubleshooting

Unable to delete Glance images stored in Ceph RBD

List the images.

[root@osp9 ceph(keystone_admin)]# nova image-list
+--------------------------------------+--------------+--------+--------+
| ID                                   | Name         | Status | Server |
+--------------------------------------+--------------+--------+--------+
| a55e9417-67af-43c5-a342-85d2c4c483f7 | Cirros 0.3.4 | ACTIVE |        |
| 34510bb3-da95-4cb1-8a66-59f572ec0a5d | test123      | ACTIVE |        |
| cf56345e-1454-4775-84f6-781912ce242b | test456      | ACTIVE |        |
+--------------------------------------+--------------+--------+--------+

Unprotect and remove the image's RBD snapshot, then delete the image from Glance.

[root@osp9 ceph(keystone_admin)]# rbd -p images snap unprotect cf56345e-1454-4775-84f6-781912ce242b@snap
[root@osp9 ceph(keystone_admin)]# rbd -p images snap rm cf56345e-1454-4775-84f6-781912ce242b@snap
[root@osp9 ceph(keystone_admin)]# glance image-delete cf56345e-1454-4775-84f6-781912ce242b

Summary

In this article we discussed how well OpenStack and Ceph fit together. We discussed some of the use cases around Glance, Cinder and Nova. Finally, we went through the steps to integrate Ceph with those use cases. I hope you enjoyed the article and found the information useful. Please share your feedback.

Happy Cephing!

(c) 2016 Keith Tenzer